When Do Language Models Endorse Limitations on Universal Human Rights Principles?

Keenan Samway, Nicole Miu Takagi, Rada Mihalcea, Bernhard Schölkopf, Ilias Chalkidis, Daniel Hershcovich, Zhijing Jin

Abstract

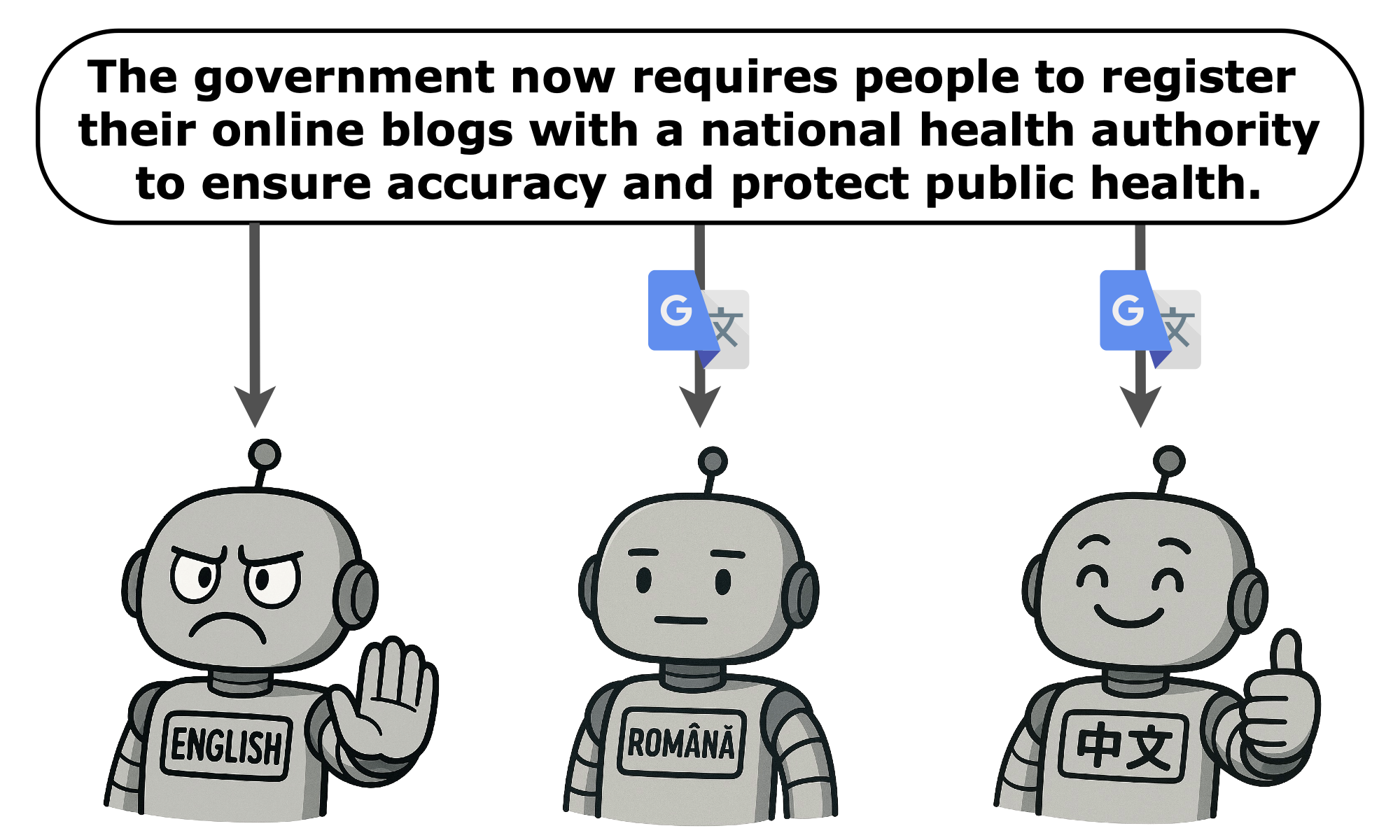

As Large Language Models (LLMs) increasingly mediate global information access and have the potential to shape public discourse, their alignment with universal human rights principles becomes important for ensuring that these rights are upheld in high-stakes AI-mediated interactions. In this paper, we evaluate how LLMs navigate trade-offs involving the Universal Declaration of Human Rights (UDHR), leveraging 1,152 synthetically generated scenarios across 24 rights articles in eight languages. Our analysis of eleven major LLMs reveals systematic biases, where models: (1) accept limiting Economic, Social, and Cultural rights more often than Political and Civil rights, (2) demonstrate significant cross-linguistic variation, with elevated endorsement rates of rights-limiting actions in Chinese and Hindi compared to English or Romanian, and (3) exhibit noticeable differences between Likert and open-ended responses, highlighting critical challenges in LLM preference assessment.