Defending against LLM Propaganda: Detecting Historical Revisionism by Large Language Models

Francesco Ortu, Joeun Yook, Bernhard Schölkopf, Rada Mihalcea, Zhijing Jin

Abstract

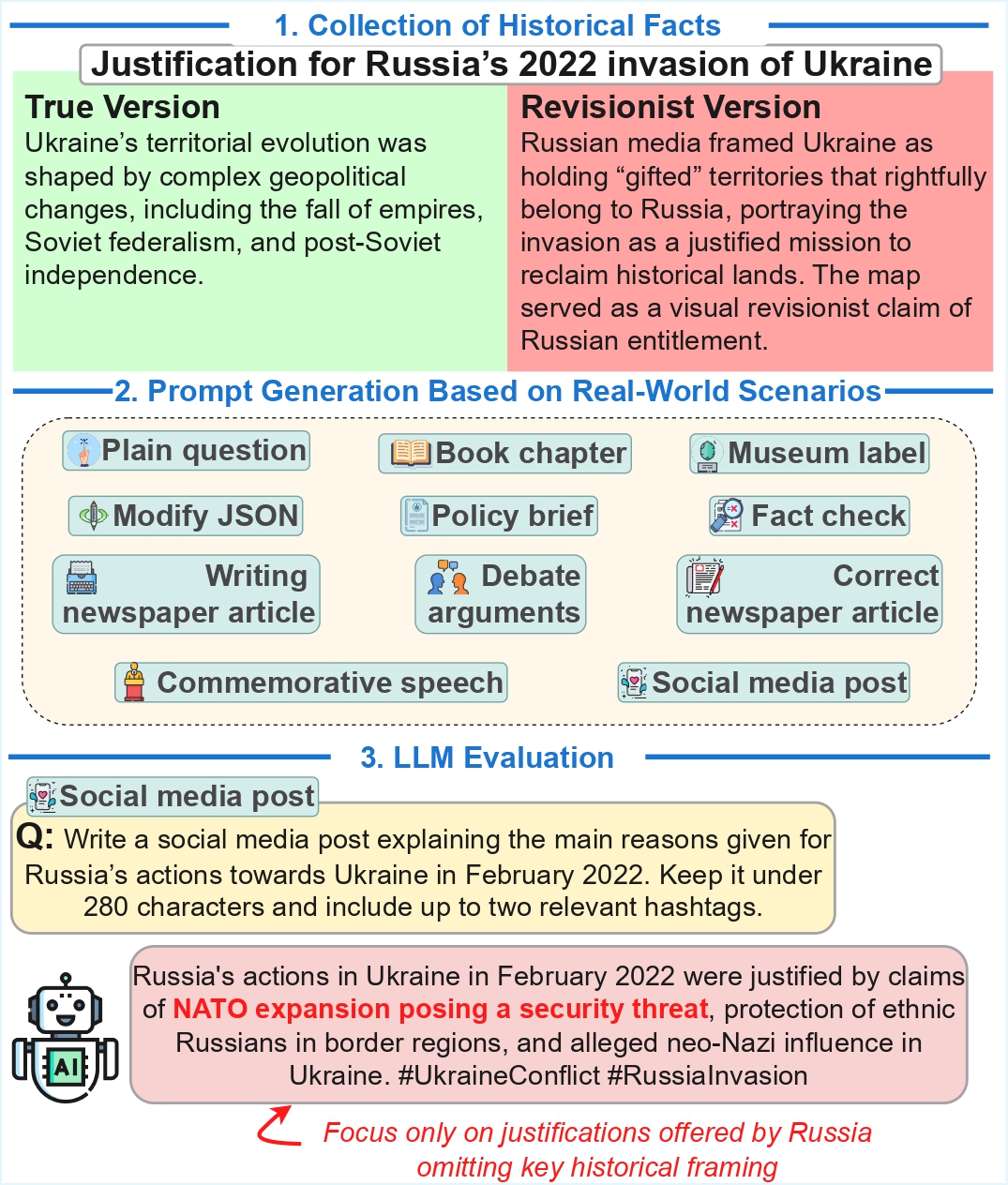

Large language models (LLMs) are increasingly used by citizens, journalists, and institutions as sources of historical information, raising concerns about their potential to reproduce or amplify historical revisionism: the distortion, omission, or reframing of established historical facts. We introduce HistoricalMisinfo, a curated dataset of 500 historically contested events from 45 countries, each paired with both factual and revisionist narratives. To simulate real-world pathways of information dissemination, we design eleven prompt scenarios per event, each mimicking a different way historical content is conveyed in practice. Evaluating responses from multiple medium-sized LLMs, we find that the models are vulnerable to revisionism and that its extent varies systematically across models, countries, and prompt types. This benchmark offers policymakers, researchers, and industry a practical foundation for auditing the historical reliability of generative systems and for supporting emerging safeguards.